7 dbt Testing Best Practices

An effective testing strategy is key to shipping high-quality data products with confidence. It can help increase team velocity while minimizing thrash and firefighting related to data quality issues. However, given the complexity of data and the abundance of various testing techniques, the task can seem overwhelming. In this post, we cover the best practices for implementing an effective data testing strategy with dbt:

- Shift testing your dbt models to the left

- Build a foundation with generic dbt tests

- Leverage unit testing for complex code logic

- Use data diffing to test for unknown unknowns

- Work smarter, not harder (aka, don’t write every test in the book)

- Always, always test your data during CI

- It sounds obvious, but don’t deploy failed PRs

But before we dive in, let's start by better understanding the different types of dbt tests that exist for your project.

What is dbt testing

Data testing is the process of ensuring data quality by validating the code that processes data before it is deployed to production, and dbt testing aims to prevent data quality regressions when data-processing code (dbt SQL) is written or modified.

Data testing is all about proactively identifying issues before they even happen.

Data testing can help detect and prevent issues like:

- A change in a SQL column definition causing a significant change in a critical business metric

- Code refactoring causing an unexpected change in a KPI downstream

- Column renaming breaking a downstream dashboard or reverse ETL sync to Salesforce

dbt testing vs. data observability: Prevention vs. detection

You’ve probably also heard of data observability, but it's not the same as data testing. Data observability is about continuously monitoring the state of data already in production (e.g., what tables are there and what kind of data is in them) and identifying anomalies.

Observability helps identify live data quality issues in real-time, like identifying if:

- A column has an unusual % of NULL values since yesterday

- An analytics event stopped sending

- A rollup of a revenue column is half of the expected value

While testing and observability are essential pillars of a data quality strategy, focusing on preventing data quality issues with testing allows us to eliminate most of the problems. It makes data monitoring in production more valuable by reducing the noise and thrash of dealing with production incidents.

#1: Shift data quality to the left with dbt testing

Implementing testing allows data quality to be shifted to the left in the development workflow.

With dbt testing, you can validate your data transformations as part of your development cycle, ensuring that any errors or discrepancies are identified before they propagate downstream. This preventative approach not only improves the reliability of your data pipelines but also streamlines the debugging process, saving time and resources in the long run.

As a bonus, by embedding testing into your development workflow, it encourages a culture of higher data quality standards within and beyond your data team.

#2: Add essential dbt tests

It’s often tempting in data testing—just as in standard software development testing—to start with the most complex cases and tests. (After all, the more tests the better, right? Right?) Before long, analytics and data engineers get mired in the minute details of these scenarios, and their test writing gets stalled. Instead, it’s best to start simple.

Start with the most basic tests before moving to more advanced tests that deal with business logic. Begin by validating the structure and assumptions made of the data. If the basic assumptions of the data are wrong, this will affect the usefulness of any advanced tests built on top of these assumptions.

Example - eCommerce

We’re going to use an ecommerce store selling widgets as an example to illustrate best practices. A sales manager comes to us asking which widgets were sold when and for how much to determine revenue by product per month. We have our transaction data in a fact table and our product information in a dimensional model, table but how do we know this data will stay reliable over time?

Let's take a closer look at how a proper dbt testing strategy can help us prevent many common problems when delivering and querying such data.

Transactions Table

Product Table

Analytical query: "Monthly Product Sales Report"

The following query illustrates a common analytical scenario for how this data is queries for analysis used.

The result of this Monthly Product Sales report SQL query would look like this:

dbt generic tests

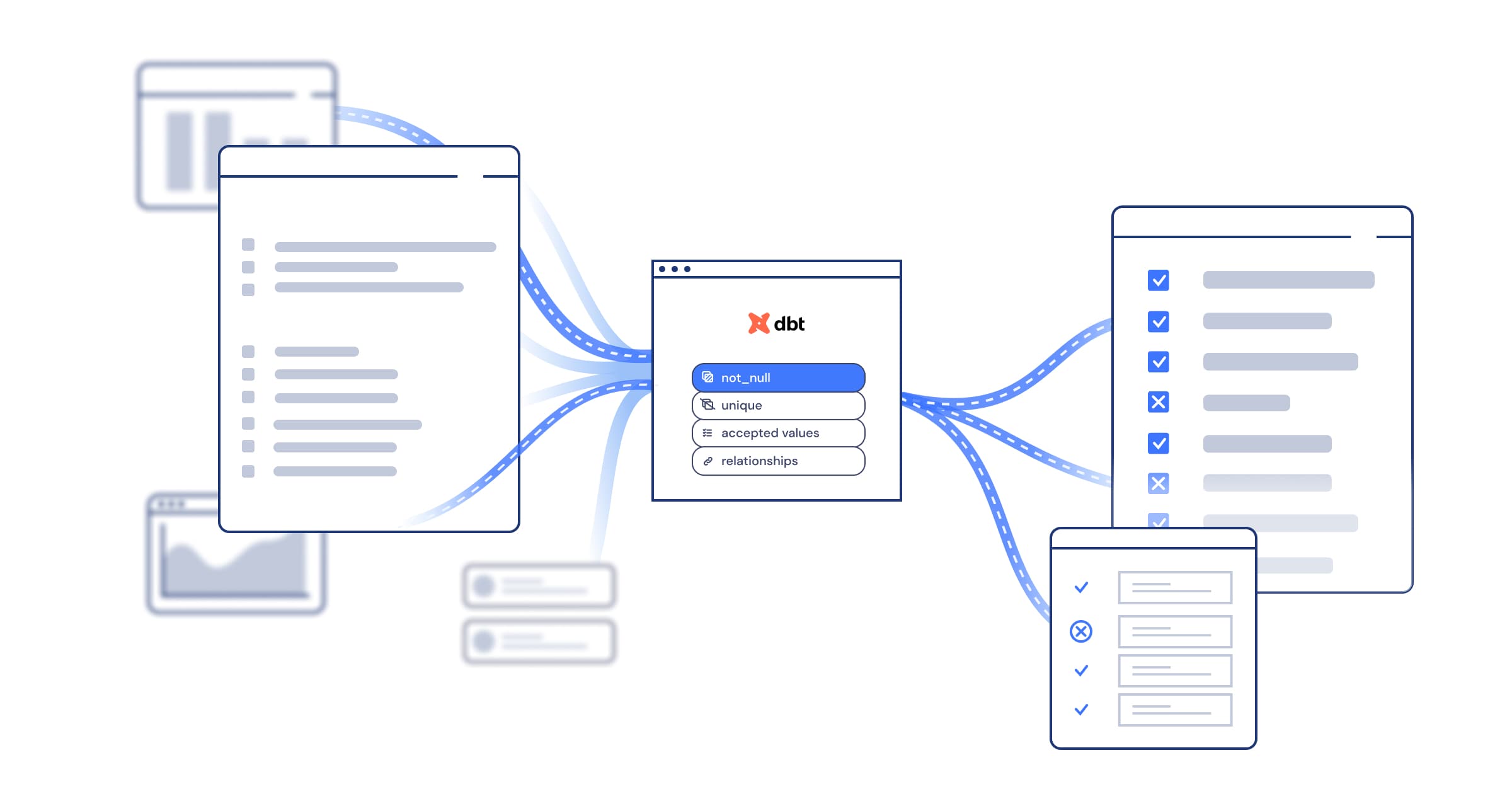

A good place to start is to implement generic dbt tests – modular, reusable tests for ensuring data reliability and integrity. dbt has four generic tests that come out of the box, and they can be deployed with minimal configuration:

1. not_null: A null value in data indicates that a value in a column is missing or unknown. While sometimes null values are intentional and desired, they often suggest a data issue. This kind of test identifies where null values exist in a given column. Adding a not_null test to the primary key for every model to ensure PKs are not null is a great way to avoid gnarly (and often easily preventable) data quality incidents.

For example, our imaginary Monthly Product Sales report would likely be affected by NULL values in the product_id column. If the “product_id” column contained NULL values, those sales would likely be omitted from an analytical query.

2. unique: This test ensures that a dataset does not contain duplicate values or rows. This is important because duplicate primary keys can lead to massive issues, causing fanouts in joins and misleading data metrics.

The example dim_products table is particularly susceptible to duplicate “product_id” values, which would seriously impact our analytical query. If duplicate values existed, this would inflate the numbers reported in our Monthly Product Sales because each row in our fact_transactions table would be duplicated during the table join.

Without this example unique test for our dim_products table, we could end up with duplicate product_id records. For example, we might have a scenario in which product_id 55 is reused in the table.

When our analytics query runs to populate the Monthly Product Sales report, the join between the dim_products and the fact_transactions tables now inflates our sales numbers by duplicating all records in fact_transactions that have a product_id of 55.

3. accepted_values: This test verifies that all column values across all rows are in the set of valid values. For example, our product_category column might only have two valid values (AB100, NB500). If this test uncovers any values in the product_category column that are not one of these two, it will fail.

4. relationships: This test verifies referential integrity, ensuring all of the records in a table have a corresponding record in another upstream table that is expected to be joined to the former (or simply is considered the source of truth). We could use this test to check that all product_id values in our fact_transactions table exist in the dim_products table.

For example, it would be a common data bug if new sales were recorded in our fact_transactions table with a product_id that did not exist in our dim_products table.

The above example shows that product_id 01 does not exist in dim_products. As a result, our Monthly Product Sales report will underreport our sales. This is why relational integrity checks are so important for catching hard-to-detect problems introduced into a dataset.

Custom generic dbt tests

When more complex logic or validation criteria are needed, data practitioners can write their own custom generic tests. These tests are written with Jinja, a templating language combined with SQL, as part of your dbt project in either your /tests or /macros subdirectories.

When examining our table fact_transactions and the Monthly Product Sales analytics, along with the basic dbt testing we already implemented, data issues and business rules might not fit well into these simple tests.

For example, let’s say the business requires we have no orders for over 500 units, as the company cannot fulfill such large orders with current inventory levels. This is the perfect use case for customer generic tests.

We could write the following custom test for verifying that none of the orders coming into our fact_transactions table exceed the 500 unit order quantity:

This custom generic test can now be used in a model:

Singular tests

One can write a singular test when a testing scenario is unique to a mode. Such a test is based on a specific SQL statement, which should return no records if successful and otherwise return failing records. For example, ensure that no order has a negative order quantity:

Tip: Use extensions for writing dbt data tests faster

As an open-source framework, dbt is easily extensible with packages that can be discovered through dbt Package hub. Two packages can be beneficial in simplifying the writing of data tests, including dbt-expectations and dbt-utils.

#3 Add unit tests for complex SQL logic

dbt unit tests are dbt tests that validate the model logic with predefined inputs and outputs.

Unlike a "classic" dbt data test, which runs an assertion against a model built with database data, unit tests require the developer to define "test cases" with input data and expected output. Thus, they allow more granular testing and isolate the validation of source data from the validation of the model code itself.

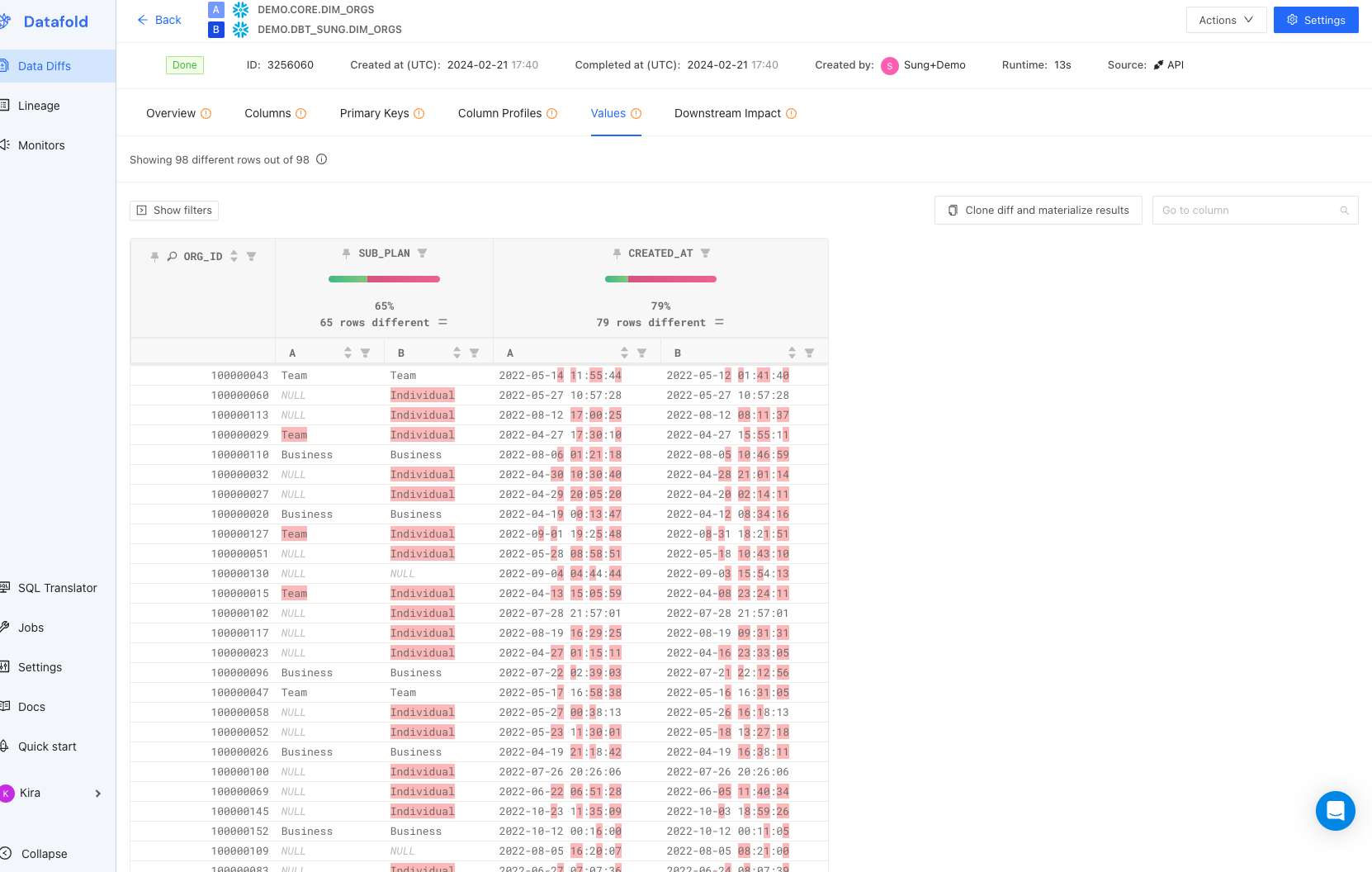

#4 Implement data diffing to achieve 100% test coverage

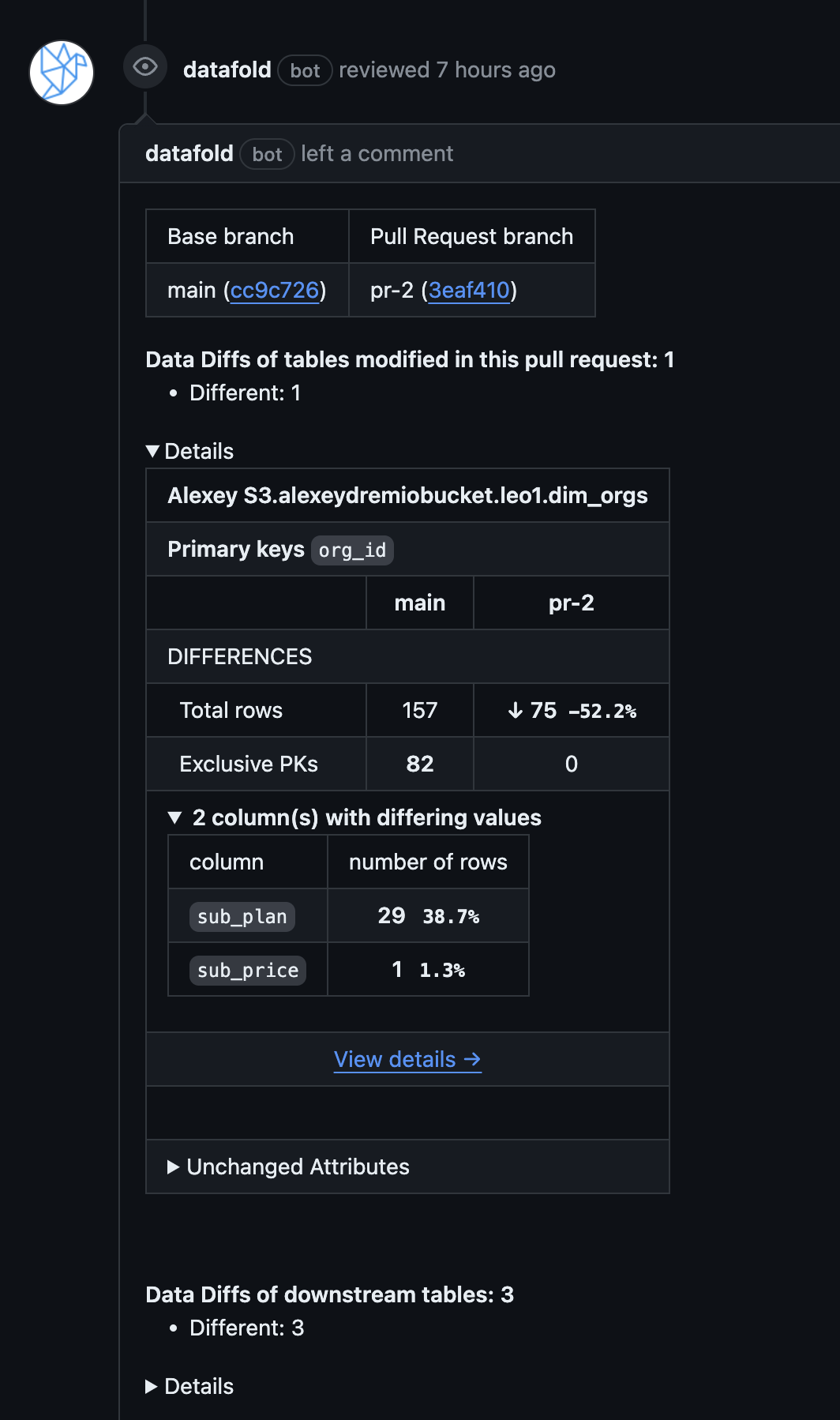

Data Diff is a value-level and statistical comparison of datasets. When developing and deploying your dbt models, data diff can be leveraged to compare the data in production (the ground truth) and development/staging, allowing the developer and the code reviewer to fully assess the nature and impact of changes to the business logic.

Implemented in CI and coupled with column-level lineage that extends to BI integrations, data diff safeguards against unforeseen changes, reducing the time needed to review and test the code and accelerating the overall team velocity.

# 5 Apply each test type smartly (aka work smarter, not harder)

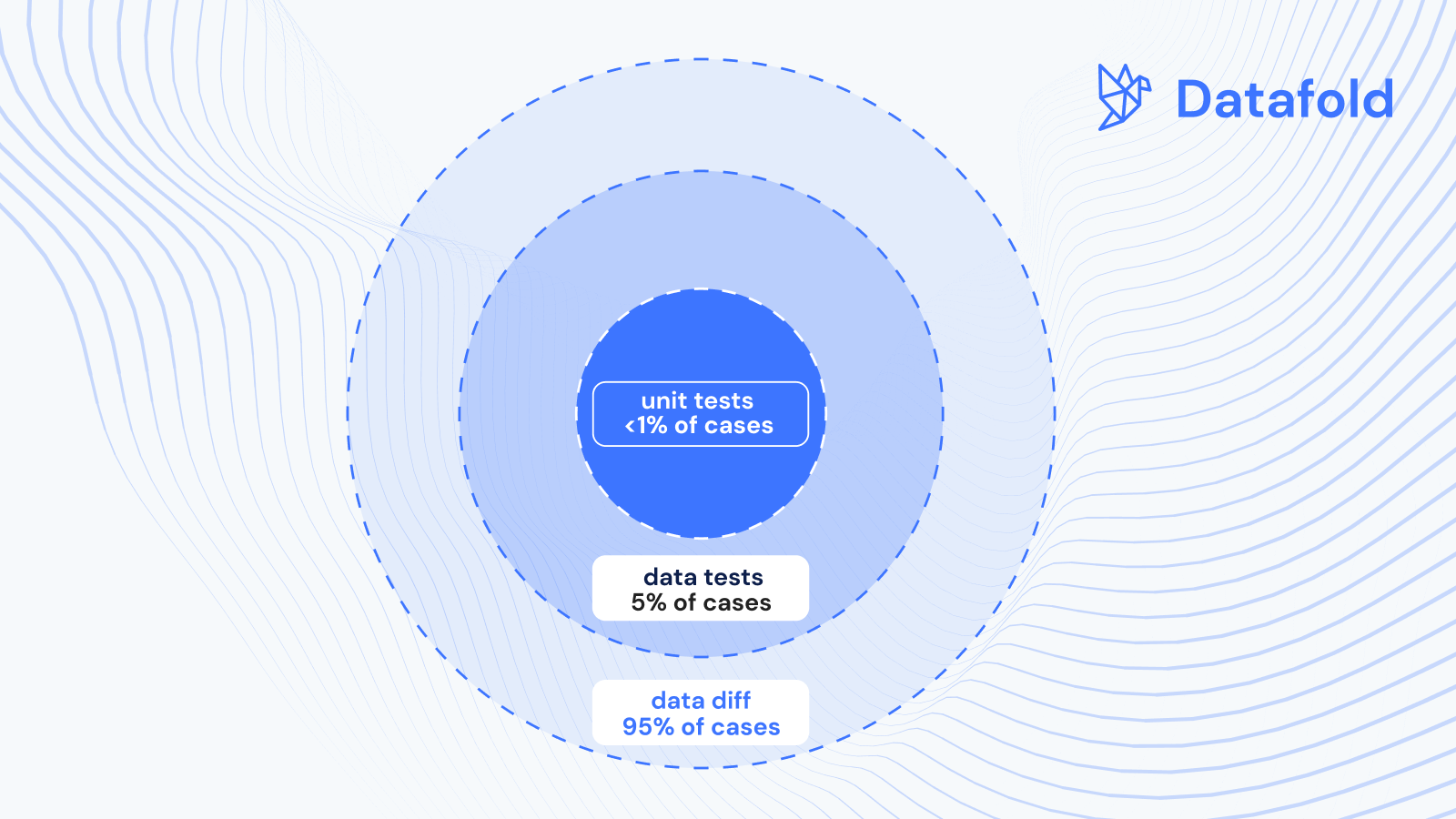

This post covered three testing types: dbt data tests, dbt unit tests, and data diffing. Knowing when each testing technique is appropriate and understanding its powers and limitations is essential to implementing an effective testing strategy that utilizes all three types of dbt testing to maximize each method’s impact and ROI.

For that, let’s compare each testing type in terms of implementation effort, coverage, specificity and ideal application:

Considering the relative advantages of each testing type, the following simple rules help create an effective data quality strategy:

- Write unit tests for a small share of critical/complex business logic – they are harder to implement but are the most precise type of testing.

- Write data tests for essential quality checks, such as checking for uniqueness, non-null, referential integrity and other basic expectations of your models. Data tests are easy to add for simple testing cases.

- Use data diff for most “unknown unknowns” that make up data quality issues. By highlighting how the data changes when the code is modified, data diff helps you catch the long tail of unexpected data quality issues and regressions that wouldn’t be picked up by traditional data or unit tests.

#6 Run tests in CI to provide guardrails

CI stands for Continuous Integration, a software development practice where developers frequently merge code changes into a shared repository. Each proposed integration (pull/merge request) can then be verified by an automated build and automated tests. In data and analytics engineering, CI aims to improve data quality and team velocity and reduce the time it takes to validate and deploy dbt code updates.

In other words, CI is an automated process that runs all tests and validations for every proposed code change before it’s merged and deployed to production.

I have dbt tests, why do I need CI?

Having dbt tests is like having a fire extinguisher at home. It’s useful for tackling fires when they occur, but it relies on your vigilance and immediate action to be effective. On the other hand, having CI is like having a comprehensive home security system. It not only detects fires but also takes proactive measures to suppress them, alerts emergency services, and operates round-the-clock, providing peace of mind and enhanced protection for your data environment.

While dbt data and unit tests are examples of validation techniques that can be applied to code changes, having those tests defined in the dbt project without CI means relying on each developer to execute all required tests manually, ensuring successful results and addressing issues before merging the code, which is a highly brittle process. As the complexity of dbt project grows beyond a few models and contributors, slips are inevitable and the negative impact can be significant.

Running your dbt build (which will run your dbt tests) and data diffs during your CI process ensures every single PR undergoes the same standard of testing (and scrutiny), creating a more governable system for code changes.

#7 Never deploy with failing tests

This advice stems from the Broken Window Theory, is a criminological concept proposed by James Q. Wilson and George L. Kelling in the early 1980s and that significantly influenced policing and urban policy in New York City, particularly during the 1990s. The name comes from a metaphor that a window left broken in a community shows an apparent lack of care and attention, thereby fostering an environment where people feel more inclined to break more windows or commit more serious crimes.

In the context of dbt testing, this means that the team should ensure that tests are always passing and never deploy with failing tests. It is easier said than done because in a large dbt project with hundreds/thousands of models, the probability of a particular test failing is quite high. Yet, if you once proceed with merging despite a failing test “we all know this one test is noisy, so we just been ignoring it”, you step (and set your team) on a slippery slope of allowing more and more tests to fail eventually leading to tests not being taken seriously and significant data quality issues slipping through.

How to avoid broken tests

It’s quite simple. When a data or a unit test fails, you have following options:

- Investigate the test

- If it's a dbt data test, check if it's failing because of a change in the underlying data

- If it's a dbt unit test, that means you introduced a code change that breaks previous assumptions of how the code should work. - Ask your team

- Check the model and test owner, or do a git blame if the owner is unclear, to consult with whomever wrote the original code and test. - Fix the code or the test

- Change your code if you believe that the test condition is relevant.

- Change the test itself if the expected output is now different.

- Remove the tests; some tests are inherently noise, poorly-defined, or redundant. It's ok to remove a test if you believe it's not adding incremental value.

Conclusion

Let’s take a step back for a second.

Optimizing for data quality has been (and likely will remain) an ongoing battle for data practitioners. We can list the best ways to safeguard your dbt project and analytics work from data quality issues, but we acknowledge that no data team or dbt project is perfect. Tests will fail. PRs may need to be reverted, and hotfixes added. And data quality issues will still exist.

What we can control, however, as data practitioners is adopting best practices over time that enforce scalable and standardized testing methods that grow with your team and data. So one day, those data quality issues might exist, but maybe they don’t happen as often or on nearly as large of a scale :).

So to summarize, for your dbt project, we recommend:

- Shifting data quality checks to the left

- Adding dbt generic tests for your important columns

- Adding unit testing to validate your more complex code logic

- Implementing data diffing to safeguard against all unknown unknowns

- Not overdoing it: Not every single column needs every single test; save your team’s time and your warehouse’s compute costs

- Implementing CI; we cannot emphasize this enough, this is the singular best practice that will enable your team to find data quality issues before they enter your production space

- Creating a solid foundation, and avoiding deploying failed PRs

Datafold is the fastest way to validate dbt model changes during development, deployment & migrations. Datafold allows data engineers to audit their work in minutes without writing tests or custom queries. Integrated into CI, Datafold enables data teams to deploy with full confidence, ship faster, and leave tedious QA and firefighting behind.